Can you imagine that the images above were created by an artificial intelligence (AI) within just a few seconds? We registered as beta users of two of the most popular tools, Midjourney and DALL-E, and summarized the most important points for you.

How to create images with artificial intelligence

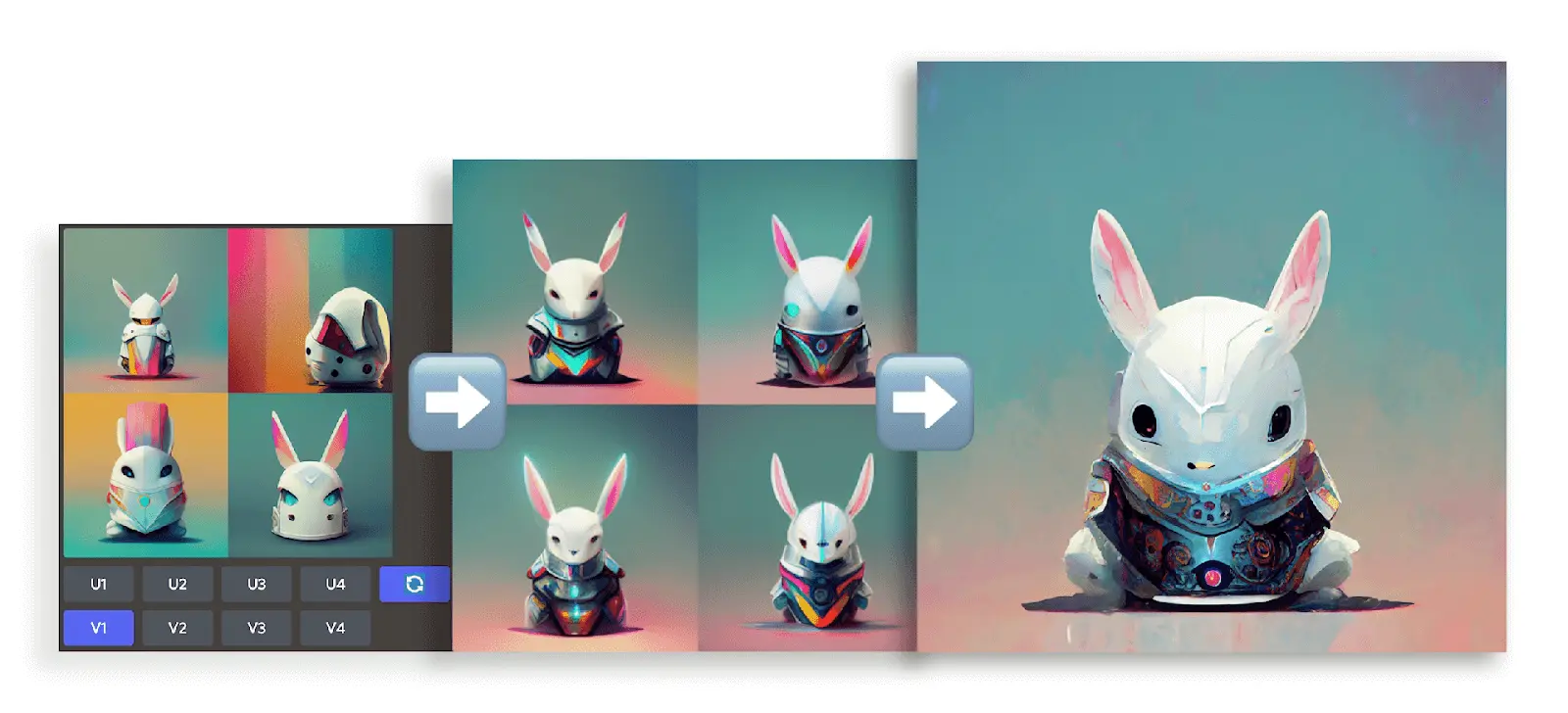

Using a series of short blocks of text, called prompts, you tell the artificial intelligence what kind of image you want to create. For example, if you write «sketch of a futuristic dwarf rabbit with colorful armor», the AI will first generate four suggestions.

You can then create further variations of individual suggestions (V1-4) or have more details generated. In this way, you can refine and sharpen your idea step by step. If none of the suggestions fits, you can simply create four completely new ones (🔄). If you are already satisfied with one, the «Upscale» option (U) renders it in even greater detail. This way you can create great images in just a few steps.

Midjourney - Process to the final version

DALL-E works similarly. Besides creating variations, you can also upload your own images or replace individual areas of a photo. This, too, works by entering text blocks: you simply describe what you want to change. In this way, objects can easily be added or replaced at specific locations in the image:
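Conceptually, replacing one area of a photo comes down to a mask that marks which pixels may change. The sketch below is a toy illustration under that assumption, not DALL-E's actual method: images are tiny grids of brightness values, `generated` stands in for whatever the model paints from the prompt, and the function name `composite` is hypothetical.

```python
# Toy sketch (hypothetical helper): combine an original image with newly
# generated pixels using a mask. Images are tiny grayscale grids (0-255).
# Mask convention assumed here: 1 = replace this pixel, 0 = keep the original.

Image = list[list[int]]


def composite(original: Image, generated: Image, mask: list[list[int]]) -> Image:
    """Return a new image that takes generated pixels wherever the mask is 1."""
    return [
        [g if m else o for o, g, m in zip(row_o, row_g, row_m)]
        for row_o, row_g, row_m in zip(original, generated, mask)
    ]


original = [[10, 10, 10],
            [10, 10, 10]]
generated = [[200, 200, 200],
             [200, 200, 200]]
mask = [[0, 1, 0],
        [0, 1, 1]]

print(composite(original, generated, mask))  # [[10, 200, 10], [10, 200, 200]]
```

In a real model, the new pixels are generated so that they blend with their surroundings; the mask in this sketch only decides where changes are allowed.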

DALL-E - Replace and add prompts

Finding the right text blocks (prompts)

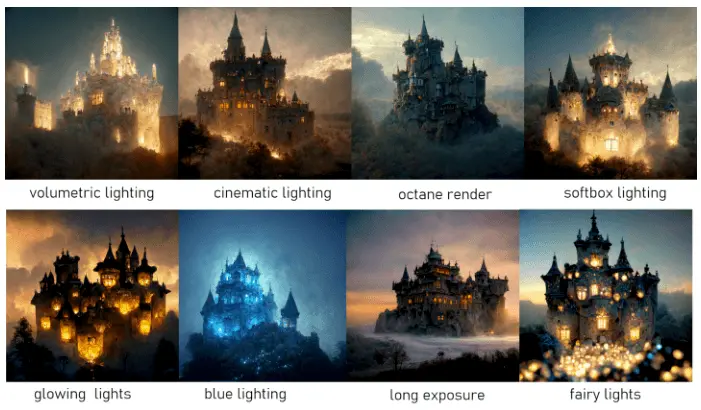

As you can imagine, there are countless ways to describe an image. However, certain building blocks or guidelines can help you reach the desired result faster. For example, you can describe the style, mention artists, specify the format, describe the nature of the light, and much more. On this topic, we recommend the detailed article by Lars Nielsen, which uses examples such as the illustration below to show how individual terms change the look of your image.
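The building blocks can be thought of as optional slots that are joined into one prompt. The helper below is purely hypothetical (neither Midjourney nor DALL-E requires this structure, and the class and field names are our own); it only sketches how subject, style, artist, format, and lighting might be combined into a comma-separated prompt.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """Hypothetical building blocks for a text-to-image prompt."""
    subject: str
    style: str = ""
    artist: str = ""
    image_format: str = ""
    lighting: str = ""

    def build(self) -> str:
        # Keep the subject first and skip any block that was left empty.
        parts = [
            self.subject,
            self.style,
            f"by {self.artist}" if self.artist else "",
            self.image_format,
            self.lighting,
        ]
        return ", ".join(p for p in parts if p)


prompt = PromptParts(
    subject="futuristic dwarf rabbit with colorful armor",
    style="sketch",
    lighting="golden hour lighting",
).build()
print(prompt)  # futuristic dwarf rabbit with colorful armor, sketch, golden hour lighting
```

Keeping each block separate makes it easy to swap a single element, such as the lighting term, and compare the results.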

Using the right lighting terms in your prompt - by Lars Nielsen via Medium

How does automatic image generation (text-to-image) work?

How does the AI even know what such a lock looks like? Or, more abstractly, what does an image look like? For an AI, an image consists only of numbers, namely brightness values, and this data has to be processed by models such as the so-called transformers. The AI is trained on countless images and learns a so-called «latent space», a representation that captures which images are similar or share the same features. DALL-E, for example, was trained on more than 650 million images, so it has seen and compared a huge number of motifs, styles, and other image features. If you were to stack all 650 million images on top of each other as prints, the pile would be about 325 kilometers high, roughly the distance from Zurich to Venice.
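The comparison works out if each printed image is assumed to be about 0.5 mm thick. That thickness is our assumption, inferred from the two numbers in the text rather than stated anywhere; the calculation is simply count times thickness, converted to kilometers.

```python
# Height of a stack of printed training images.
# Assumption: each print is about 0.5 mm thick (inferred, not a measured value).
NUM_IMAGES = 650_000_000
THICKNESS_MM = 0.5

MM_PER_KM = 1_000_000  # 1 km = 1,000 m = 1,000,000 mm

stack_height_km = NUM_IMAGES * THICKNESS_MM / MM_PER_KM
print(f"{stack_height_km:.0f} km")  # 325 km
```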

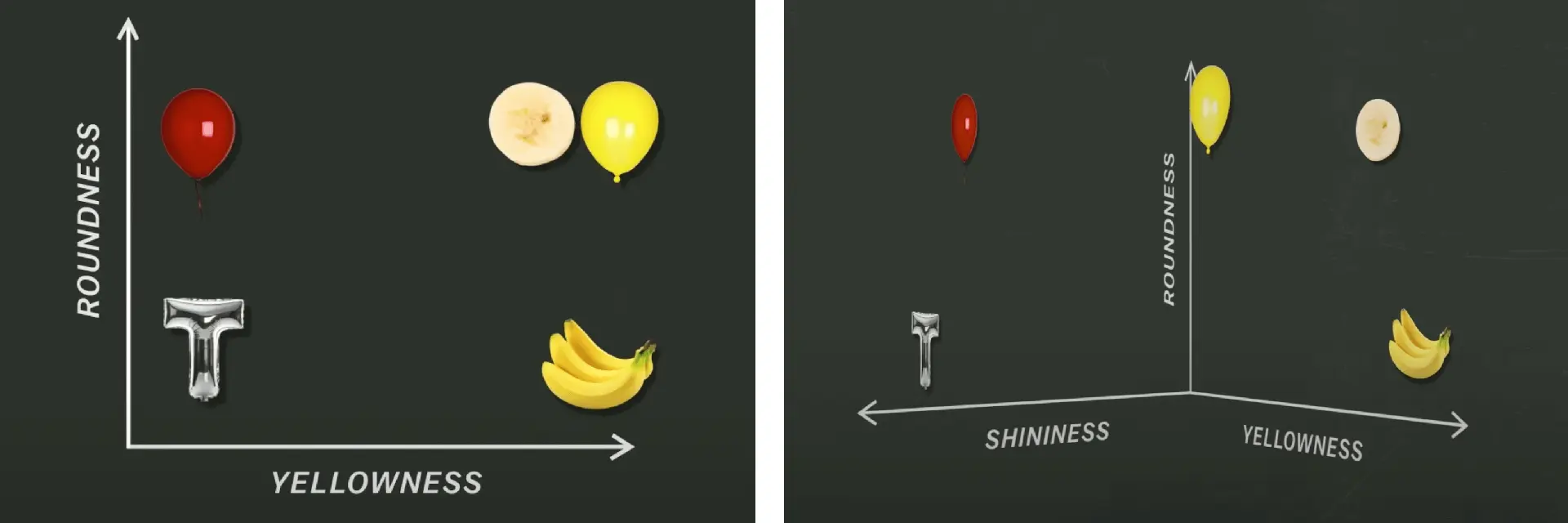

The AI thus learns the features of objects all by itself. Put simply, these could be the two features «roundness» and «yellowness» (see Figure 4). With only these two, however, the cut banana looks too similar to the balloon, so ideally the AI also learns a third feature such as «shininess», and so on. Over time, many dimensions come together that the AI can use to classify an image or, conversely, to create one (see this short YouTube video).
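The banana-and-balloon example can be made concrete by treating each object as a point in feature space and measuring the distance between points. The feature values below are invented for illustration (a real model learns its own, far more abstract features): with only «roundness» and «yellowness», the two objects coincide; adding «shininess» pulls them apart.

```python
import math

# Hypothetical feature scores between 0 and 1: (roundness, yellowness, shininess).
objects = {
    "cut banana": (0.9, 0.9, 0.1),
    "yellow balloon": (0.9, 0.9, 0.9),
}


def distance(a: tuple[float, ...], b: tuple[float, ...], dims: int) -> float:
    """Euclidean distance using only the first `dims` features."""
    return math.dist(a[:dims], b[:dims])


banana, balloon = objects["cut banana"], objects["yellow balloon"]
print(distance(banana, balloon, dims=2))  # 0.0: indistinguishable with two features
print(distance(banana, balloon, dims=3))  # about 0.8: separated by shininess
```

Each extra dimension gives the model another way to tell objects apart, which is why learned feature spaces have many dimensions.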

In practice, however, the features are not as easy to interpret as in the example above; they are captured in a very abstract way. For example, the features of an AI used for face recognition look like this:

Abstraction layers for face recognition.

In the higher layers of the deep learning architecture, eyes and other facial features become recognizable on closer inspection. And since generated images always start from «random noise», each image is unique.

How does the AI understand text input?

Understanding text is not trivial for an AI. Earlier, simpler AIs used the bag-of-words (BoW) approach. BoW counts the words in a text, optionally weighting them by importance, and thus represents the text as a set of numbers. See also one of our older blog posts, «Teaching a Computer how to read».
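A minimal bag-of-words sketch using only Python's standard library: each text becomes a count of its words. It also previews the weakness of the approach: two sentences with opposite meanings can produce exactly the same bag, because word order is thrown away. Real systems typically add normalization and weighting such as TF-IDF; this sketch only counts.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text, split on whitespace, and count each word."""
    return Counter(text.lower().split())


a = bag_of_words("The dog bites the man")
b = bag_of_words("The man bites the dog")

print(a)       # Counter({'the': 2, 'dog': 1, 'bites': 1, 'man': 1})
print(a == b)  # True: same words, same counts, different meaning
```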

This approach works well, but the AI runs the risk of losing context when several sentences follow one another. Moreover, ambiguous words can change their meaning depending on context. To keep track of context, an AI needs the ability to remember previous words. This is possible, for example, with a Long Short-Term Memory (LSTM) approach. To keep context regardless of text length, it needs a kind of global memory called «attention». In 2017, a Google research team showed that a model built on attention alone can deliver remarkable results.

This gave rise to «Transformers», the latest hype in deep learning. Perhaps GPT rings a bell? GPT stands for «Generative Pre-trained Transformer». The summary in this blog post is very basic; if you want to learn more, we recommend the paper «Attention Is All You Need».
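At the heart of the Transformer is scaled dot-product attention, defined in «Attention Is All You Need» as softmax(QKᵀ / √d_k) · V: each word (query) is compared with every word (key), and the result is a weighted mix of their values. The pure-Python sketch below implements that formula for a single head only, without the learned projection matrices, masking, or multiple heads of a real Transformer; the toy vectors are made up.

```python
import math

Matrix = list[list[float]]


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Returns (output, weights); row i of `weights` says how strongly
    position i attends to every position.
    """
    d_k = len(k[0])
    weights = []
    for q_row in q:
        scores = [
            sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
            for k_row in k
        ]
        weights.append(softmax(scores))
    output = [
        [sum(w * v_row[j] for w, v_row in zip(w_row, v)) for j in range(len(v[0]))]
        for w_row in weights
    ]
    return output, weights


# Three toy token vectors (made-up numbers); Q = K = V gives self-attention.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(x, x, x)
for row in w:
    print([round(p, 3) for p in row])
```

Because every position can attend to every other position directly, context is available regardless of how far apart two words are, which is the property the text calls a «global memory».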

Technical limitations and challenges

If you want DALL-E to display a word or even a sentence in your image, you will quickly notice that this currently does not work very cleanly. This is simply because the AI has not been trained for it and, in the case of DALL-E, has not yet seen enough images to learn it. According to Google, its own model, Parti, can already solve this problem, but it took a model with 20 billion parameters to get there.

A green sign that says «Very Deep Learning» and is at the edge of the Grand Canyon. Puffy white clouds are in the sky.

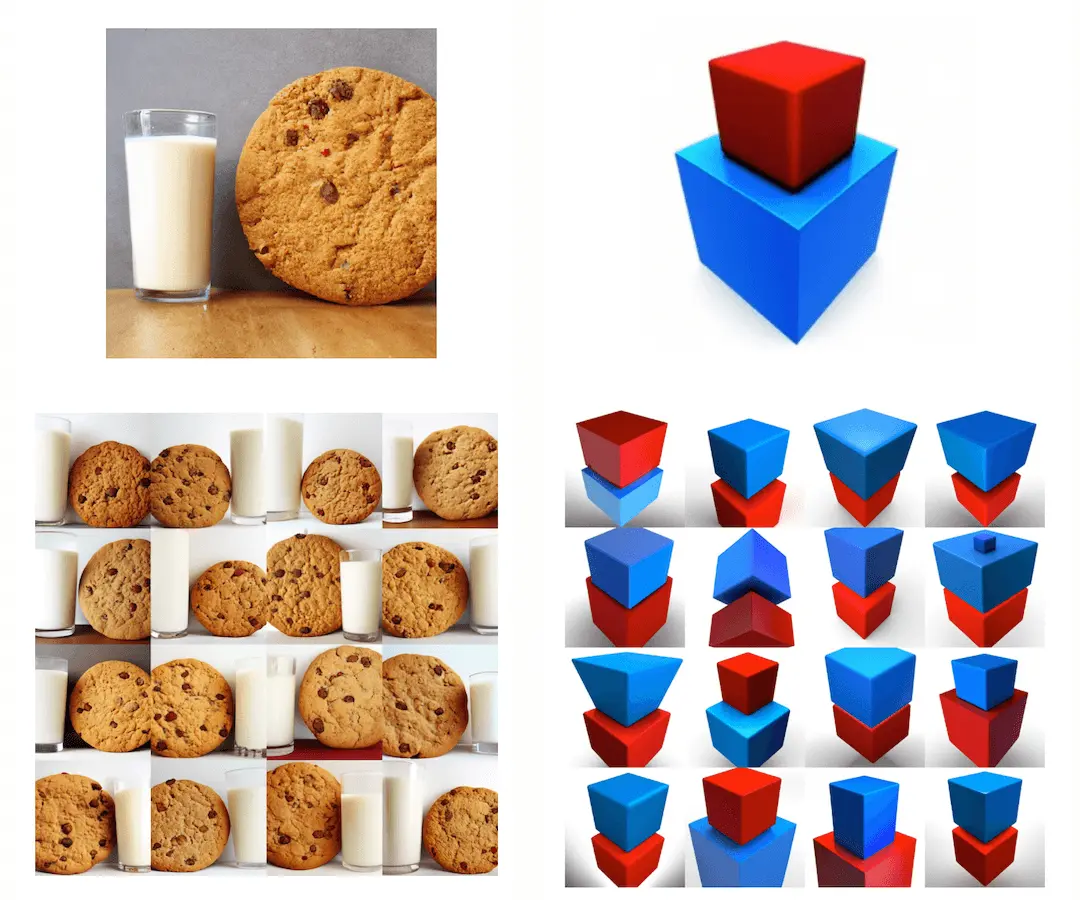

Besides the comically rendered road sign, there are also problems one would not expect at all, which show that the technology is still in its infancy. The following examples, for instance, show problems with proportions and with keeping the order of colors consistent when a text block is adjusted.

Examples of current technical limitations

Socio-demographic influences and concerns

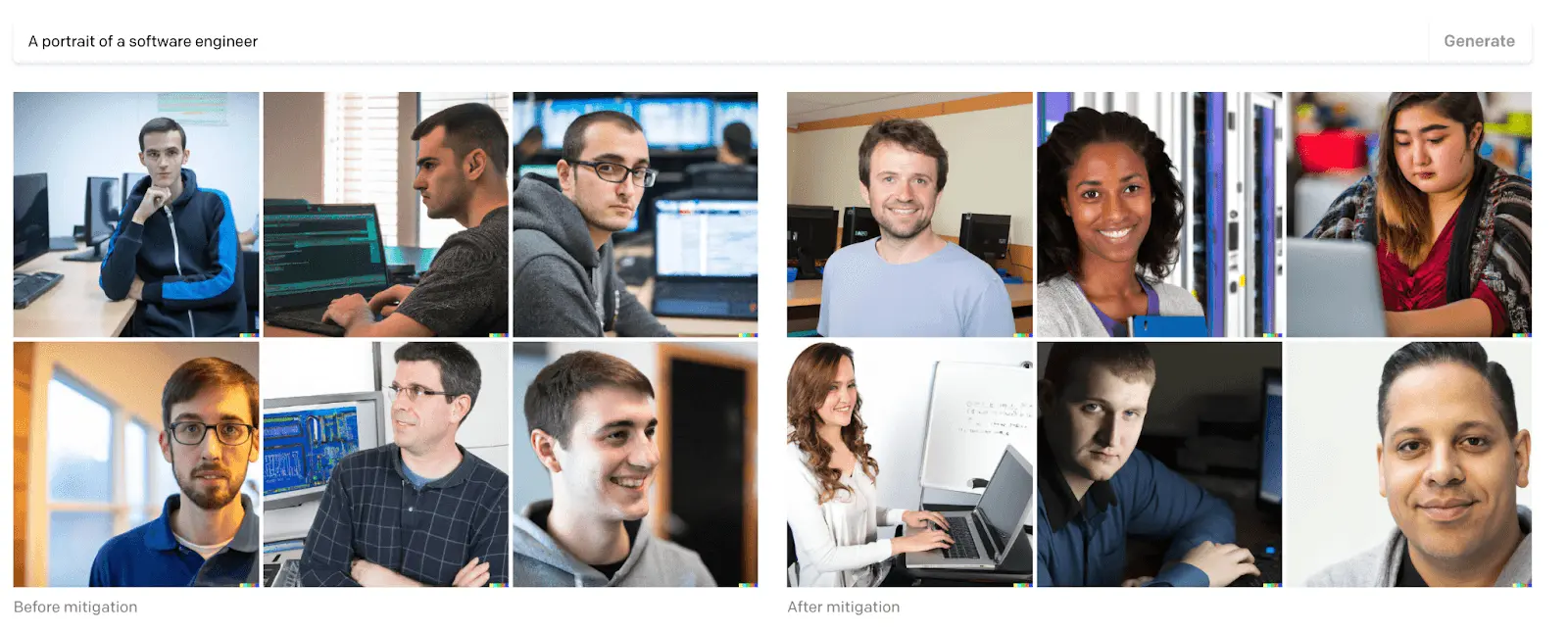

Another issue stems from human biases: at first, the AI reinforced stereotypes. For the term «software engineer», for example, it tended to show mostly white Western men, and for «unprofessional hairstyles» mainly dark-skinned women. This is, of course, related to the data the AI was trained on, and at least in the case of DALL-E, steps have already been taken to counteract the problem.

Pictures of a software engineer before and after the adjustment by DALL-E.

There are also concerns about the widespread use of such tools. Even Google takes the position in its written principles that not everyone should have access to them, since deepfakes or fake news can be created relatively easily. See also «It's Getting Harder to Spot a Deep Fake».

Conclusion

The current versions of Midjourney and DALL-E are powerful tools and great for letting your creativity run wild. At the same time, we must be aware that this technology comes with a responsibility: it should not be used for malicious purposes.