What are flaky tests?

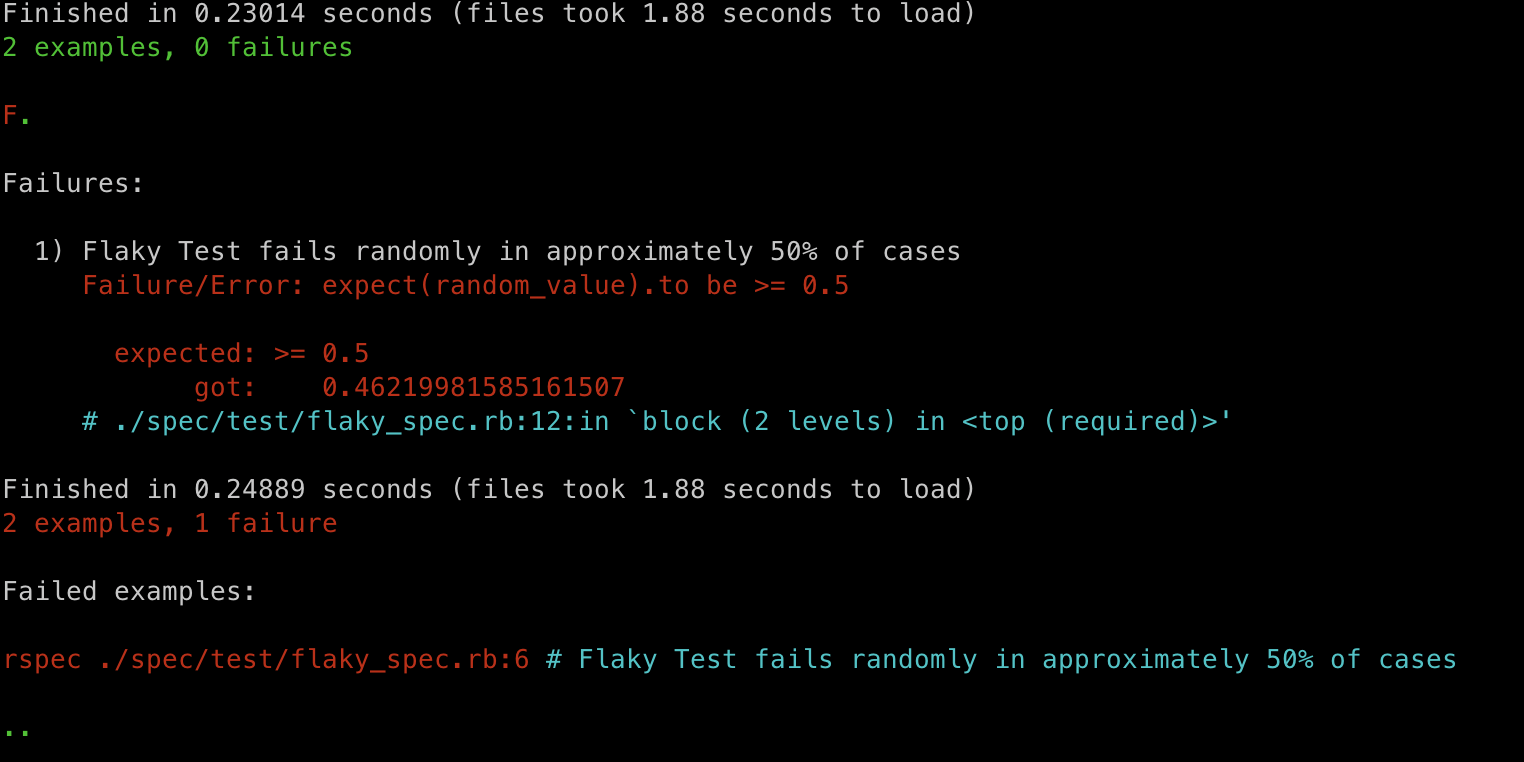

A flaky test is one that passes in some runs but fails in others, without any changes to the underlying code. These inconsistent results are typically caused by non-deterministic behavior in the application or the test environment. Common causes include:

- Tests that rely on execution order

- Tests that interact with elements before the page has fully loaded

- Race conditions or timing issues
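To make the first cause concrete, here is a minimal plain-Ruby sketch of order dependence. It is not taken from a real suite: `USERS`, `TESTS`, and `run_suite` are hypothetical names, and the "tests" are just lambdas that return `true` when they pass. The point is only that shared state lets one test change the outcome of another.

```ruby
# Hypothetical in-memory "database" shared by both tests.
USERS = []

# Each test is a lambda that returns true when it passes.
TESTS = {
  creates_user: -> { USERS << "alice"; USERS.size == 1 },
  starts_empty: -> { USERS.empty? }
}

# Run the tests in the given order and collect pass/fail per test.
# With reset: true the shared state is cleared before every test,
# which is the job a setup/teardown hook would normally do.
def run_suite(order, reset: false)
  USERS.clear
  order.to_h do |name|
    USERS.clear if reset
    [name, TESTS.fetch(name).call]
  end
end

# Both tests pass in this order.
p run_suite([:starts_empty, :creates_user])

# Same tests, different order: starts_empty now fails,
# because creates_user left a record behind.
p run_suite([:creates_user, :starts_empty])

# Resetting state between tests makes the order irrelevant.
p run_suite([:creates_user, :starts_empty], reset: true)
```

Running a real suite in random order is one way to surface this kind of hidden coupling early.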

At first glance, the easiest way to deal with a flaky test is to rerun it. In fact, there are tools designed to automatically retry tests upon failure. But is rerunning really a solution, or are we just postponing the problem?

Short-Term Gain, Long-Term Pain

You might argue that ignoring flaky tests saves time. Fixing them takes effort, and deadlines are tight. But this is where the sunk cost fallacy creeps in: the more time you spend rerunning failing tests, the more you convince yourself it’s acceptable, even normal.

Over time, your test suite becomes bloated and slow. You start to question every failure:

Is this an actual bug or just another flaky test?

You rerun it. It passes. You move on. Confidence in the test suite erodes, and you start testing manually far more often.

The Real Problem Emerges

This strategy breaks down completely when multiple flaky tests start appearing. Now, no matter how many times you rerun the test suite, one test always fails, but it’s never the same one.
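A quick back-of-the-envelope calculation shows why. The sketch below assumes, purely for illustration, that every flaky test passes 98% of the time and that the tests fail independently of each other. Neither number comes from a real project, and `suite_pass_probability` is a hypothetical helper.

```ruby
# Probability that the whole suite passes when it contains `flaky_count`
# independent flaky tests, each passing with probability `pass_rate`.
def suite_pass_probability(flaky_count, pass_rate)
  pass_rate**flaky_count
end

# Print the chance of a fully green run for a growing number of flaky tests.
[1, 5, 20, 50].each do |count|
  probability = suite_pass_probability(count, 0.98)
  puts format("%2d flaky tests -> suite passes %.1f%% of the time", count, probability * 100)
end
```

Under these assumptions, 20 flaky tests already bring the chance of a green run down to roughly two in three, even though each individual test looks almost reliable.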

You’ve trained your team to ignore failures instead of fixing them. Instead of addressing root causes early, you’ve buried them under layers of retries and reruns, until your CI pipeline becomes unreliable and your team spends hours fighting fires.

It Gets Worse When You’re Context-Switching

Flaky tests often block you during unrelated work. You’re trying to finish a feature or fix a bug, but unrelated tests fail, and you can’t merge. You’re forced to dive into unfamiliar parts of the codebase to fix issues you thought you could ignore. That context switch is costly, especially if the test was written by someone else and you have to rediscover all its assumptions from scratch.

Easy Fixes Do Exist

Most flaky tests are system tests. These simulate user behavior in a browser and are sensitive to timing and page load issues. Fortunately, some fixes are straightforward.

Consider this RSpec system test (Rails):

```ruby
# "Abmelden" is the German label of the logout button.
find("button.bg-danger", text: "Abmelden").click
expect(page).not_to have_content("Admin")
```

This may fail intermittently because `expect(page).not_to...` executes before the logout process completes.

A more reliable structure would be:

```ruby
find_button("Abmelden").click
# Wait for the sign-out confirmation before checking what is gone.
expect(page).to have_content(I18n.t("devise.sessions.signed_out"))
expect(page).to have_no_content("Admin")
```

Here, we first wait for a confirmation message before asserting that the user has been logged out. This change avoids race conditions and improves stability.
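The idea of waiting for a condition instead of checking it once can be sketched in plain Ruby. This is not how Capybara is implemented. `wait_until` and `FakePage` are hypothetical stand-ins, and the 0.2-second delay simply simulates a logout that finishes asynchronously.

```ruby
# Poll the block until it returns a truthy value or the timeout expires.
# Returns true if the condition was met in time, false otherwise.
def wait_until(timeout: 2.0, interval: 0.05)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    return true if yield
    return false if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
    sleep interval
  end
end

# Hypothetical stand-in for a page whose logout completes in the background.
class FakePage
  attr_reader :content

  def initialize
    @content = "Admin dashboard"
  end

  # Starts the logout and returns immediately, like a click in a browser.
  def click_logout
    Thread.new do
      sleep 0.2
      @content = "Signed out successfully."
    end
  end
end

page = FakePage.new
page.click_logout

# A single check right after the click still sees the old content.
immediate = !page.content.include?("Admin")

# Polling gives the logout time to finish before giving up.
waited = wait_until { !page.content.include?("Admin") }

puts "immediate check passed: #{immediate}"
puts "polling check passed:   #{waited}"
```

A one-shot check depends on how fast the application happens to respond. A polling check only depends on the application responding within the timeout.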

But Some Fixes Take Time

Not all flaky tests are easy to fix. Some require deep investigation, discussions with teammates, or research. When you ignore these problems instead of solving them while the context is fresh, you create technical debt. When the problem resurfaces weeks later, it’s harder to resolve because you’re no longer familiar with the details.

Conclusion

Flaky tests are more than just an annoyance: they are a compounding liability. Ignoring them might save time today but will cost you far more tomorrow. The longer you defer the fix, the more painful and disruptive it becomes.

If your test suite doesn’t give you confidence in your software, what purpose does it serve?

Fix flaky tests early. Don’t let them accumulate. Treat them as bugs because that’s precisely what they are.